THE PHILOSOPHICAL DEBATE OVER AUTONOMOUS VEHICLES IS UP IN THE AIR

We should consider more than just the technological requirements

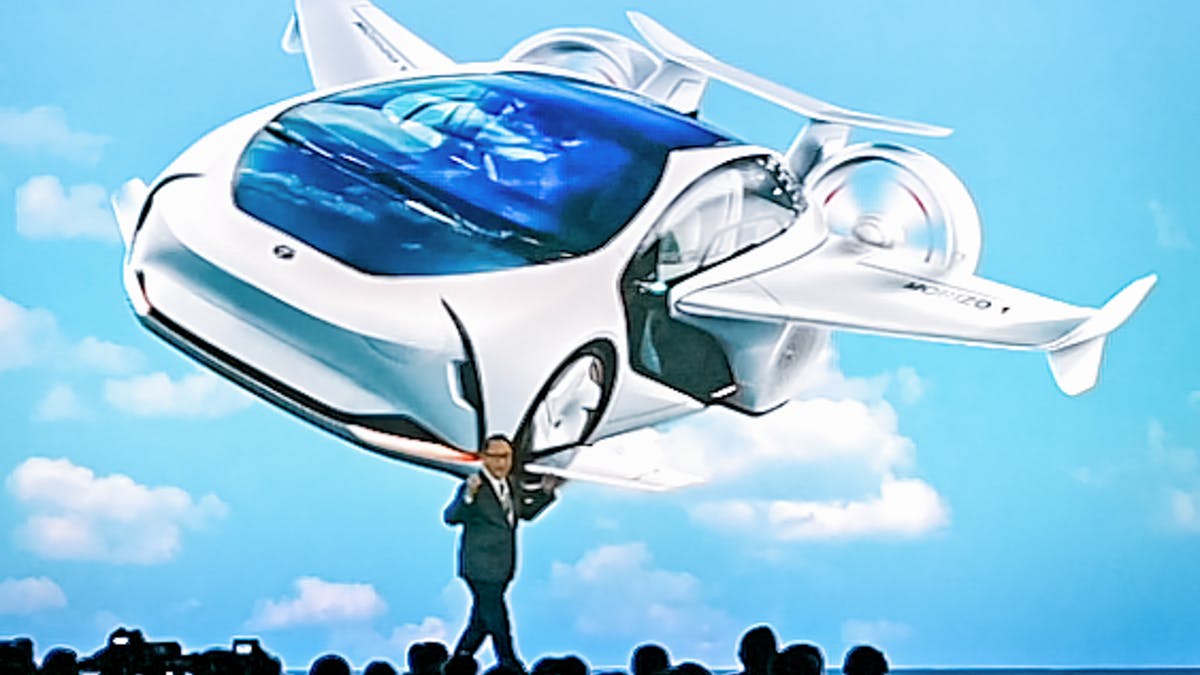

The debate over the benefits versus the liabilities of autonomous vehicles is not just limited to the ground. As you will now see, it takes on a whole new perspective up in the air, and even beyond.

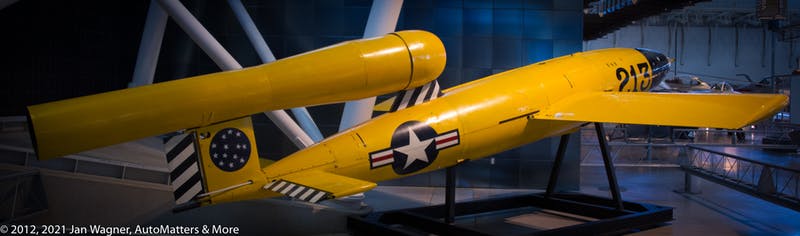

I recently read an article about a technologically advanced new version of the F-15 fighter jet. Deliveries to the U.S. Air Force will soon commence, to replace some of its very old F-15 aircraft: www.engadget.com/boeing-f-15ex-182417866.html?utm_source=sailthru#comments

This article prompted a particularly spirited, controversial debate in the comments section about the need for human pilots to physically be in the jets, as opposed to piloting them remotely. While cost/benefit analyses and safeguarding the lives of military pilots suggest that remote piloting is the better way to go, there are also moral issues related to waging war remotely to consider.

Some of you fellow old-timers might remember a particular episode of the original 1960s “STAR TREK” TV series that could provide helpful insight into this moral debate.

As I recall that episode, two neighboring planets had been at war with each other for generations. Thanks to technological advances, their never-ending conflict had long since evolved beyond actually fighting with each other in person. Instead they had their technology do that virtually, so as to prevent immediate harm to any of their people, and physical damage to anything. Their being so removed from the violence of war also minimized disruption to their daily lives and economies, keeping things rolling along.

The results of their virtual military confrontations were tallied up, and then predetermined citizens of each of the warring planets voluntarily walked into dematerialization chambers, thus ensuring very real, but sterile, outcomes to their virtual encounters on the battlefield.

This conflict had continued for generations, becoming the societies’ normal way of life, until the crew of the USS Enterprise intervened and forced the warring parties to suffer immediate, severe consequences of their battles.

Faced with these additional, serious consequences of their conflict, the two societies saw the folly in fighting remotely and decided to end their war soon thereafter.

As the saying goes, “War is hell.” Keeping it that way is a powerful deterrent to war.

So, how does that relate to a discussion about autonomous automobiles? Let’s assume that the required technology will be mature and reliable.

Just as in STAR TREK’s warring planets episode, there will be impacts on society, and perhaps unintended consequences, to consider. The technology in that episode did not account for (or even consider) the psychological and emotional effects on the people. When people are in control of their destiny in life-or-death situations, they may not make the same decisions that a cold, calculating, unfeeling, algorithm-dependent artificial intelligence (AI) would.

In a particularly dire, developing situation that will likely culminate in a serious, potentially life-threatening driving accident, there may be no good, obvious, easy choices. Would a human driver make the same choices about whose life is more important that an AI might make?

Recall the “Captain Dunsel” episode of STAR TREK where, during the 2268 war games test of the M-5 Multitronic Unit, a computer took over all of the ship’s operations and controlled all maneuvers of the USS Enterprise, with disastrous consequences. FYI, “dunsel” is a term for a part that serves no useful purpose: memory-alpha.fandom.com/wiki/Dunsel

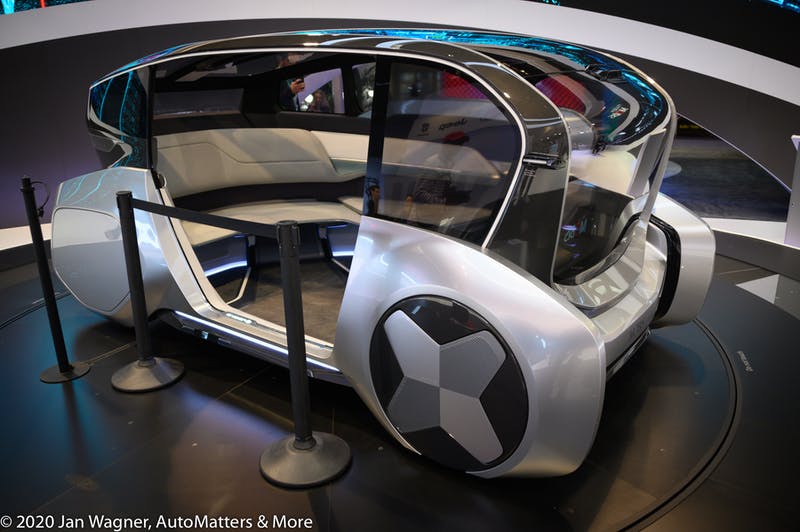

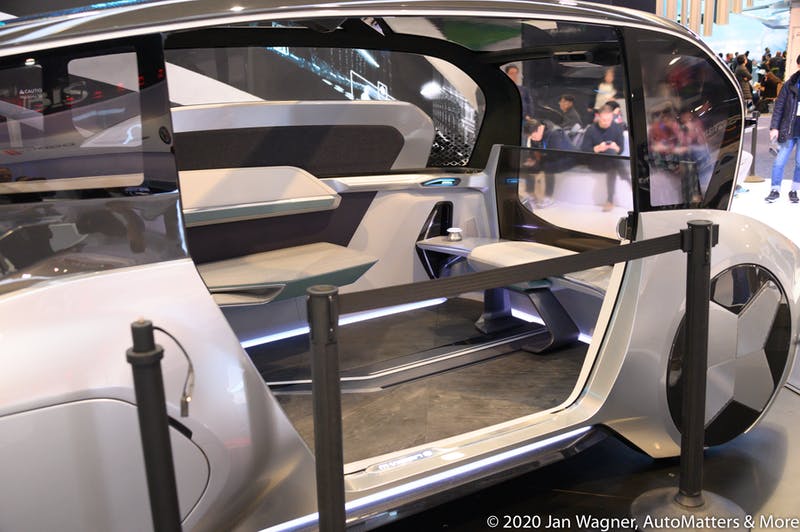

In SAE Level 5 automotive autonomy, vehicles – without so much as a steering wheel – will be able to drive themselves in all driving environments and at all times, with no input or oversight from their human occupants. Even though such sophisticated self-driving autonomy would significantly reduce traffic accidents, would you be okay with giving up your control of the driving for the greater good?

At what point might we give up so much of our ability to do things ourselves, to be in control of our own destinies, that we might become irrelevant, like STAR TREK’s “Captain Dunsel,” essentially conceding that our AI machines would do a better job than we could? At what point should we say “enough is enough”?

Let us seriously consider the unintended consequences, while we still have a say in the matter. This may be a slippery slope, from which there may be no turning back — a point of no return. What say you?

COPYRIGHT © 2021 BY JAN WAGNER – AUTOMATTERS & MORE #679R2